Open one social media platform and you’re hit with a fake video; open another and you’re hit with bigotry. Open a news article, and you’ll find some victims “killed” but others “dying.” Each account of events in Israel and Palestine seems to rely on different facts. What’s clear is that misinformation, hate speech, and factual distortions are running rampant.


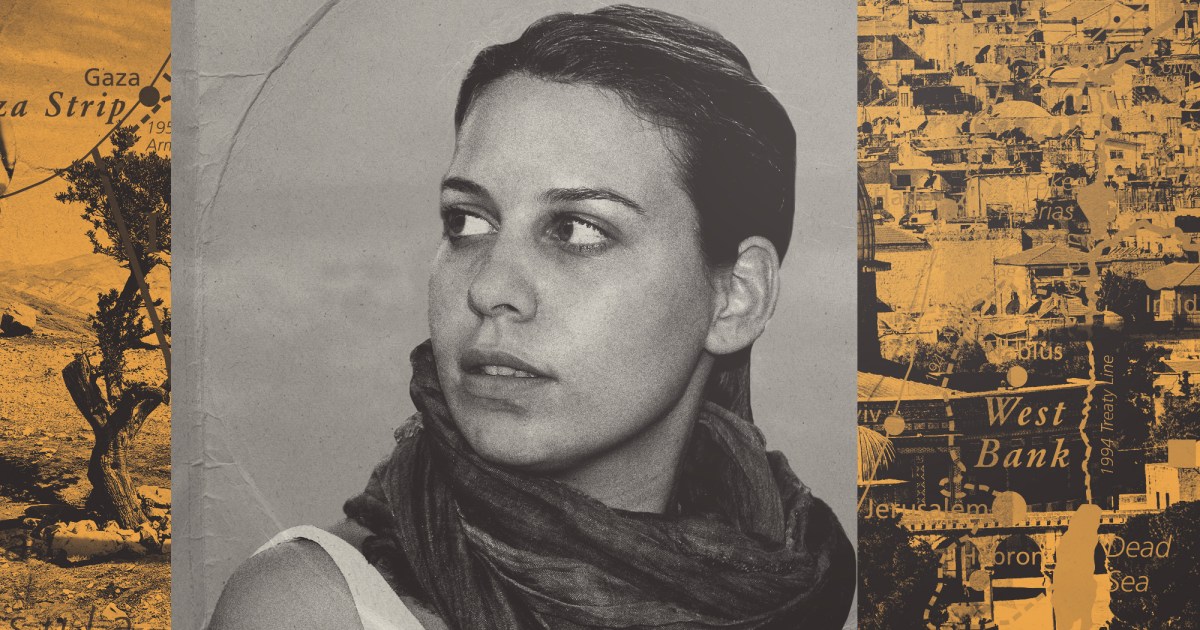

In such a landscape, how do we vet what we see? I spoke with experts across media, politics, technology, and communications about the information networks surrounding Israel’s war in Gaza. This interview, with communications and policy scholar Ayse Lokmanoglu, is the fifth in a five-part series that also includes computer scientist Megan Squire, journalist and news analyst Dina Ibrahim, Bellingcat founder Eliot Higgins, and media researcher Tamara Kharroub.

Computational social scientist Ayse Lokmanoglu is an assistant professor at Clemson University and a faculty member at its Media Forensics Hub. Her award-winning research examines the effectiveness of extremist disinformation, tracks radicalization in the public sphere, and analyzes the digital communications of “armed non-state actors”: essentially, what it means for the Islamic State to be on the internet.

We spoke about the role of social media in armed conflicts and political tension. Users, Lokmanoglu reminds us, “have agency [to] slow down the spread” of digital misinformation.

What is the significance of social media for misinformation about Israel and Palestine?

Social media has a lot of advantages. We have easier access to global information; previously, you couldn’t get information from conflict areas. In cases of authoritarian regimes, it gives you a variety of information: we can see the differences between what we get from state actors and what we get from the ground.

In the Arab Spring, we saw a change in tactical information: people were using English postcards and English signs. That is a clear sign that they wanted to reach beyond their own language borders, to a global audience. Social media facilitates this.

Now, when we have very politically tense events and conflicts, there’s more demand for information. Social media provides a marketplace: we have all this demand, and we have the supply. It creates a very fast, emotionally charged exchange of information. Everyone’s guard is down. Because tensions are high, we need measures to validate the information. With this much supply and demand, information integrity becomes very important.

What happens when information isn’t validated?

One of the most common things I’ve seen around this conflict is people saying, “I don’t want to share anything because I don’t know what’s true anymore.” There is a sense of not knowing what’s true and not knowing how to verify it.

As consumers of social media, it’s important for us to gauge information integrity. We’re seeing platforms such as X take a step back on content moderation. Blue checks [on X] used to help people verify accounts, and now we’ve lost that. Community Notes help to some extent, but they’re not fast enough. Also, as a peer-review system, they carry internal bias, so it’s not going to be 100%.

We’ve had media and misinformation for centuries; in the Second World War, radios were used to wage information warfare. But social media has greatly amplified the reach, access, and speed of information. We’re exposed to it far more often, on multiple screens and in multiple places. It’s not only what we see on X; it’s also what our friends share and what circulates in private group chats.

“When you wait for other organizations to verify, you’re actually helping.”

What we found is that people sometimes share disinformation unconsciously. They trust the sources, or they trust the people who shared it, so they don’t actually do due diligence.

Rather than sharing immediately, take a moment and wait for a reputable organization to verify the information. I think we need to slow down rather than take part in the immediate sharing and liking. When you wait for other organizations (reputable news organizations, reputable OSINT groups) to verify it, you are actually helping the cause more than harming it.

Your research has looked at extremist violence and social media. How are recent social media policies affecting the spread of disinformation?

I do think the spread of information, especially extremist information, is dangerous because it can be very triggering. It can be very personal, private, and inhumane to share some of these things. When you share images of people without knowing them, you intrude on their personal or private rights.

Our research has shown that images have a higher retention rate. They hit your senses much harder: because you don’t need language, you process them much more easily. Seeing images intensifies the tension and the emotions. In both cases, the Hamas attack and the Gaza bombing, the line between what should be shared and what shouldn’t is really being blurred.

When the Islamic State was prominent on the internet, there were initiatives to moderate harmful content while also coming up with solutions that did not censor information. The solutions focused on safety and on mitigating and minimizing harm. My hope is that these platforms come together again and talk about common solutions rather than individual ones.

How does social media contribute to the rise of hate speech?

When you have this information, especially graphic images, it really heightens the notion that “this group is our group,” even though the group you’re in is socially constructed in many ways and is very fluid and changing. That notion solidifies the moment you feel like you’re under a target.

When we start really polarizing and losing the human aspect, that’s when hateful rhetoric, dehumanization, really starts.

“We have force as users…to go back to the place where social media was benefiting people on the ground.”

No one is correcting each other. It’s not actually socially acceptable [not to do]. If you hear someone use hateful rhetoric next to you at a dinner party, you will be uncomfortable. You would either correct them or, if you’re conflict-avoidant, leave or hint through social cues that it’s not acceptable. We need to bring that back into social media.

How does the technology behind social media contribute to the spread of misinformation?

Since there’s a demand for information, bad actors will want to supply it. Technology really helps them through deepfakes and manipulated images and videos. You can have multiple fake accounts “fact-checking” each other. You can also unleash bots and trolls that generate millions of likes so the algorithm pushes [certain content].

When it comes to deepfakes, right now it takes so much to identify them. You also need much more advanced technology that we, as lay users, don’t have. As long as we’re aware of this and try to address it, we have force as users. We have agency. We can slow the spread. We should try to go back to the place where social media was good: just slow down a little to actually change social media, back to when it was helping us and benefiting people on the ground.

This interview has been lightly edited and condensed for clarity.


Mother Jones; Getty; Photo courtesy of Ayse Deniz Lokmanoglu.